Remembering Professor Halcyon Lawrence

On the last day of Black History Month, and the eve of Women’s History Month, I wanted to take some time to tell you about Professor Halcyon Lawrence, who is a part of both of these histories. She was a brilliant, kind, pathbreaking, and warm colleague, and she died suddenly in late October 2023.

It has taken me a while to make these thoughts public because she was such a special person, and I wanted to do justice both to her and her work in writing about her. I know I have missed many things below, but one other reason I feel it’s important to post this is that, thanks to the boom in “generative” AI, hundreds of random sites started posting fake, ChatGPT-generated obituaries of her right after her death. These were full of misstatements and outright falsehoods, but unfortunately many sounded true enough that well-meaning people intending to memorialize her and spread word of her passing accidentally (and understandably) shared them. In this current moment, the hype around AI-generated informational poison knows no bounds, and unfortunately that means that nothing is off limits.

One of the things that was so important to Halcyon in life was making sure that humanity did not get lost in the course of deploying technology, and that people were centered in any conversation about how machines should work. So I am trying to honor that part of her message with this post that pushes back, in some small way, against the inhumane AI-generated text that is starting to take over the web. If you are a person who knew and admired her, please leave a comment to add your memories of, and experiences with, Dr. Lawrence. (There is a moderation delay as I manually approve comments.)

Dr. Halcyon Lawrence was a good friend and colleague whom I had known for over a decade. She was a graduate of the technical communication PhD program (now defunct) at Illinois Institute of Technology, and at the time of her death was an Associate Professor of technical communication and user design at Towson University in Maryland, having earned tenure early. (A Towson graduate has written a very moving remembrance of her here.)

She was an amazing scholar, and a unique and original thinker who studied how linguistic imperialism embedded itself into speech technologies, and how this issue could be averted and countered by both technical and nontechnical means. At the time of her death, she was one of only a few scholars working on this problem, which affects the majority of English speakers in the world.

She was also the kind of person who let everyone else know how much she valued and appreciated them every time she talked to them, and her good example rubbed off on others. As a result, I always tried to let her know how much I valued and appreciated her every time we talked. I was so thankful for how she had taught me to be more open in that way, particularly when I realized, in retrospect, that we had talked for the final time.

Prof. Lawrence was originally from Trinidad and Tobago, and this deeply influenced the work that she did: she focused on linguistics and technical communication, with a particular interest in how accent bias in automated technologies opened up new questions and areas of study. She often pointed out that even though the number of people in the world who speak English with a “nonstandard” accent (in other words, not British, American, or Australian) matches or exceeds the number who speak with a “standard” one, speech recognition technologies barely take this into account.

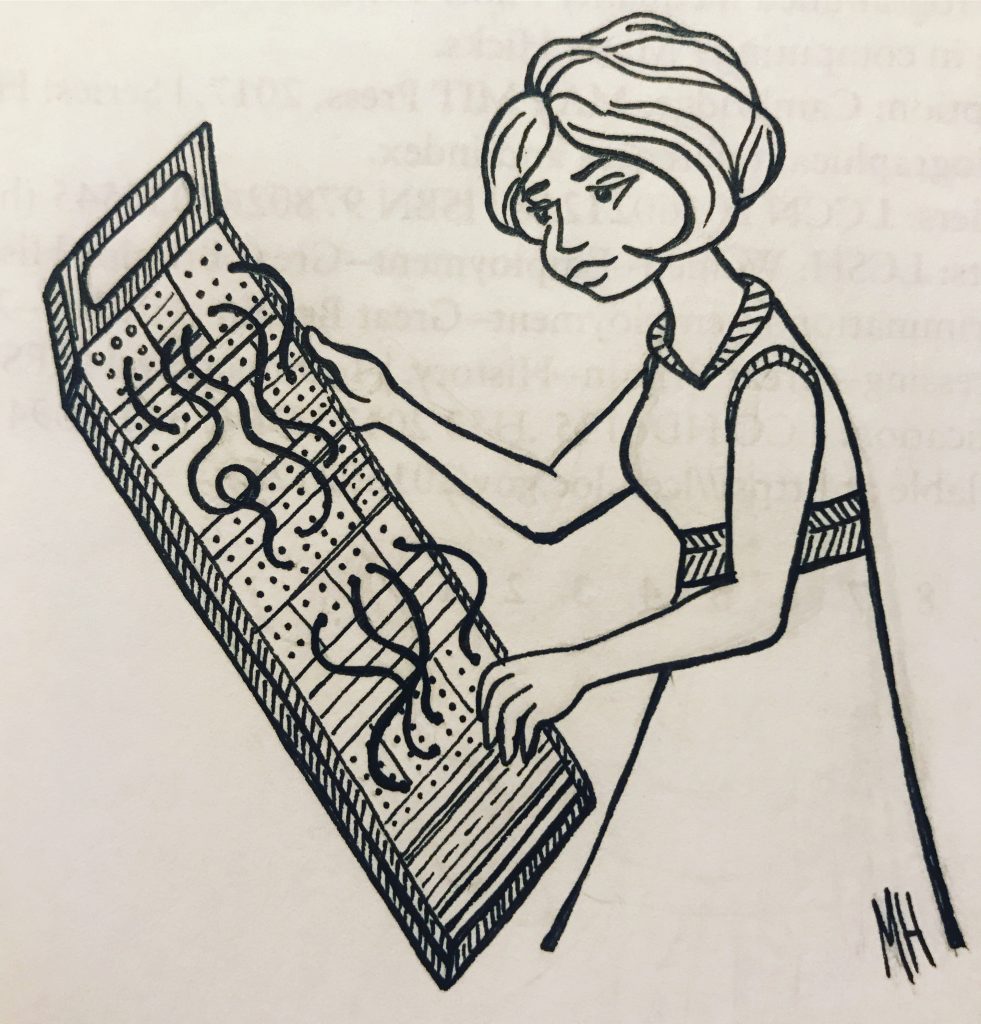

As someone who herself often needed to code-switch to be understood by automated systems, she felt this inequitable part of technological “progress” acutely. One thing she always highlighted was that she could use clear speech strategies to communicate with people who didn’t immediately understand her accent (such as speaking more slowly, hyper-enunciating, etc.), but doing these things with machines was rarely as successful. People can meet each other halfway, while machines have often been programmed to refuse to do so. And this, she pointed out, was an intentional, political, technical choice on the part of the largely white and U.S.-based technologists who initially designed and deployed these technologies. If you’d like to read a chapter she wrote on this topic from a book I co-edited, you can read a preprint for free here: Siri Disciplines. Below, you can see a photo of her presenting this work at Stanford, at one of the conferences that led up to the publication of that book.

Halcyon had the ability to hold audiences rapt when she spoke about her work, both because of what she was saying and because of how she communicated. In the spring of 2023 she gave a talk to my graduate seminar that my students gushed about for weeks afterward. She made her lectures participatory and engaging, always meeting people where they were without giving up where she was coming from. I was so glad to have funding to invite her to come speak to my fall 2023 class as well (via videoconference), and she had been looking forward to it. But when we talked via email just a week before, she knew she was ill and needed time off to rest. She thought she had more time left than just a few short days, so I was deeply saddened and shocked when I heard about her passing. The historian in me recognizes that if there were less structural racism in the medical systems that we rely on in this country, she may well not have ended up in the sudden, dire position that she did.

The memory that seems to resonate for many people who knew Dr. Lawrence is how unusually kind and engaged she was. Halcyon was well known and loved in her field, and many other fields, for her kindness and generosity of spirit, her intellectual fearlessness, and her willingness to mentor, help, and support her fellow scholars. Her razor-sharp insights and her dedication to building more inclusive communities and technologies, especially speech technologies, impacted multiple disciplines and powerfully influenced how people thought about the relationships between speech, empire, and technology. Colleagues in her field are in the midst of preparing a special section of Communication Design Quarterly to commemorate her work’s impact, and I greatly look forward to reading it. Even in her lifetime, her work was highlighted in the press for pointing out an important new dimension in the fight against biased and broken tech.

When Halcyon and I were both at Illinois Tech, it was always a treat when she was in her office and free to talk at the same time I had free time. A few minutes of conversation with her could truly light up your entire day. One afternoon, we walked out to the lakeshore in Hyde Park, where she told me about her upbringing in Trinidad and Tobago, surrounded by devices on the cutting edge of computing because of the work her father did. She was always comfortable with technology, just never comfortable with needlessly ceding power and agency to it. She wanted it to be firmly human-centered and to serve people’s needs better. While still a graduate student, for instance, she collaborated on a project to help the Chicago Transit Authority make the stop announcements on the ‘L’ and on buses more easily understood in high-noise environments. If you have ridden public transit in Chicago, you have probably benefited from her work.

One of my first meetings with Halcyon is the memory that feels most fitting to end with. When I interviewed for my job at Illinois Tech, she (as a grad student) was the only member of the department who stayed for the final meeting of the day. While all the faculty in the department had left early, she stayed to talk to me some more as the sun set and the roads iced over on a cold Chicago winter night. I kept letting her know that she didn’t have to stay just to keep me company, but she truly wanted to stay and talk to me. She valued ideas, but valued the people they came from even more. Her intellectual practice was strengthened so much by her approach to people, and I will always remember the impact this had. Over the years, I have often found myself thinking of how she did things when I am trying to do better. It was an honor to have known her and learned from her.

Rest in peace, Professor. You made such a positive impact on so many.