History of Computing Class, Assignment 1

Students, comment on this post by writing a three-paragraph response to the following:

So far, we’ve discussed the precursors to electronic computing. What are the three most important things we’ve learned?

Please use formal English and write your response as you would a short academic paper. Include relevant, specific historical details to make your points, but remember to keep it concise: this should only be three paragraphs.

Your comment will not show up right away: I will approve the comments after the deadline, once everyone’s had a chance to respond, so as not to bias your answers.

As noted on your syllabus, your comment is due by 10pm on Thursday, Sept. 6. There will be no credit given for late responses or technical difficulties (so don’t leave it until the last minute).

Have fun.

Note: If you see bracketed text in the comments, that represents text I added or text I corrected–in other words, alterations from the student’s original essay. My objective in correcting your posts is to make your blog comments a useful learning resource for the class and anyone else on the web who may come across them later. I want to ensure we put as little misinformation out on the web as possible.

If there are three things I have learned in class so far, they are as follows.

Firstly, when pursuing funding, practicality is key. When an inventor lacks practicality, as Charles Babbage did, he is unlikely to get his idea sponsored. Babbage received a sizable sum of money from Parliament to develop his Difference Engine because it was a good idea that promised to revolutionize nautical table-making. But although he was brilliant, he lacked practicality. He never developed the engine into the serviceable product Parliament wanted, one that would make nautical tables faster and more cheaply, and he lost sight of Parliament’s objective in favor of his own. Ten years and 17,000 pounds after his first grant, he had produced only a proof-of-concept model of the Difference Engine that could not fulfill its design purpose of making tables. At that point he asked for additional funding to build a more sophisticated Analytical Engine instead. Parliament wanted cheaper tables, had taken a big gamble on Babbage and his Difference Engine to get them, and had lost. It would not “throw good money after bad” and sponsor a new machine that could not make tables, no matter how sophisticated the mathematics it was capable of. For Parliament’s purposes, Babbage had proved unreliable, and future funding for his ideas was denied.

On the other hand, Herman Hollerith, though he did not possess Babbage’s polymath intelligence, was practical enough to make up for it. Hollerith worked in the United States Census Office, the largest data processing operation in the country. He was also fond of travel and carnivals, where he saw music for calliopes and physical descriptions of people encoded as holes in punch cards. With the 1880 census and the coming 1890 census, the Census Office had a problem on its hands: the volume of census returns was so large that they could not be processed and published in a reasonable length of time using manual techniques. Case in point, it took just shy of 1,500 clerks, using slips of paper and the “tally system,” seven years to process the returns for the 1880 census. Given the growing population, the 1890 census could not be processed using current techniques. The options were to hire a prohibitively expensive number of clerks, to let processing continue well into the next decade, or to find a better, faster way to do it. In a stroke of practicality, Hollerith applied the principle of “punch card photographs” describing people to his census work by developing a machine that used punch cards to tabulate the collected statistics, thus speeding the census’s completion. His idea worked. Using the Hollerith system, the 1890 census was completed in just two and a half years and at a $5 million savings. Hollerith never struggled for money again in his life, going on to start [the company that later became] IBM, one of the leaders in computing, whereas Babbage died unfulfilled and in relative obscurity.

Secondly, if you’re not a good publicist, get one. I will again use Charles Babbage and Herman Hollerith as my examples. Intelligence and social aptitude are sometimes said to lie at opposite ends of a continuum, inversely proportional to each other. Given the Analytical Engine’s brilliance, it is not hard to guess which end of the spectrum Babbage was closer to; the textbook (_Computer: An Information Machine_ by Martin Campbell-Kelly and William Aspray) goes so far as to call his personality “abrasive” on page 50. Despite this, Babbage was prominent in science and society at his peak, both because his ideas were too brilliant to ignore and because he was probably not as hard to get along with in reality as the text makes it seem. But after the failure of his Difference Engine and the irrelevance of his Analytical Engine to the government’s goals, Babbage fell from his pedestal. He did not begin to recover until 1840, when he was invited to Turin, Italy, to speak about his engines. There he met the young Luigi Menabrea, who wrote and published an account of the Analytical Engine. Back in England, Ada Lovelace translated and expanded Menabrea’s account. In Menabrea and Lovelace, Babbage gained publicists of sorts, especially for the audience of the future, but it was too little, too late: Babbage never regained his former standing or built either of his engines, and he died isolated and unappreciated. Herman Hollerith, on the other hand, was a good publicist, and an entrepreneur to boot. Though the distance between professor and student was much smaller in Hollerith’s time than it is today, the fact that Hollerith’s university professor liked him enough to invite him to work at the Census Office as his assistant has to count for something, especially since the only reason Babbage adopted Ada Lovelace as his protégé was that she fully understood his engines’ complexity and potential when few others did.
Compared to Babbage, Hollerith was good enough with the public and with the people who worked for him that he was able to grow his idea for a census tabulating machine into a successful business, the Tabulating Machine Company, which later became part of IBM. He also personally oversaw his machines’ operations, dealing directly with the people who operated and maintained them. As a result, Hollerith built a business valuable enough to sell for $2.3 million, and he died a rich man.

Thirdly, disciplines that can benefit from blending or merging will do so. On page 23, the textbook states that computers are used in offices for three main tasks: document preparation, information storage, and financial analysis/accounting. Today, one computer can perform all these tasks, but in the late 19th century and roughly the first half of the 20th century, this was not so. To type up an order for X widgets, a typewriter would be used. To find out how much the order would cost, an adding machine would be used. Then, a copy of the completed order would be stored in a filing system. As electronic computers became more powerful and moved into the office, they eventually merged these three functions into a single machine. Another example of the benefit of merging disciplines is the formation of the Remington Rand conglomerate, made up of the Remington Typewriter Company and the Rand Kardex Company. This blending of one of the leading typewriter brands and the leading maker of record-keeping systems allowed its constituent companies to succeed in the computer age, when neither likely would have on its own. A more recent example within computing is the accelerated processing unit, or APU, whose basic principle is combining a computer’s central processing unit (CPU), the part that does most of the computer’s general-purpose processing, with its graphics processing unit (GPU), which renders the images displayed on the screen. In practice, this is usually done by moving a small GPU onto the main CPU die. The history of the APU starts in the 1980s and 1990s, when dedicated graphics hardware became a common feature in personal computers. From then until recently, the CPU and GPU, though linked by electrical connections, were separate chips. In 2010, Intel released its first generation of CPUs with integrated GPUs. AMD released its own first generation of APUs the following year. Almost all of both manufacturers’ current-generation CPUs now include an integrated GPU.
Though the benefit of merging the CPU and GPU is tangible for the consumer market, it has not yet been fully realized. Because they have to share limited space with the CPU, integrated GPUs lag behind discrete, dedicated GPUs in raw performance, but as technology progresses, the gap between the two is likely to narrow, making computers faster, cheaper, and more energy efficient.

One of the most important things we’ve learned so far is that the advent of the computer age didn’t occur randomly or without foundation. In fact, the fundamental factors that helped bring about the widespread use of modern digital computers were pioneered as early as the 18th and 19th centuries. One prominent example is the story of Charles Babbage and his computational engines. Prompted by the need for accurate tables for nautical navigation, Babbage convinced the British government in the early 19th century to fund his “Difference Engine,” which he claimed would be able to do the [complex] arithmetic normally relegated to humans, but with much greater efficiency and accuracy. This idea eventually led him to develop his concept of the “Analytical Engine”: a machine that could do any kind of mathematical operation, not just addition and subtraction. The mathematician Ada Lovelace (née Byron) wrote succinctly that “the Analytical Engine weaves algebraical patterns just as the Jacquard-loom weaves flowers and leaves,” [in trying to describe how the machine could automate the process of solving complex problems.] History would prove that Babbage’s ideas were well ahead of his time, but they never came to fruition during his lifetime [due to lack of funding and problems manufacturing pieces for his machines that had the correct tolerances].

His Analytical Engine, as he designed it, was meant to separate the processes of computation and information storage. This highly important separation would prove paramount near the turn of the 20th century, when budding information industries needed new and more efficient methods of computing and storing large quantities of data. The history of information processing closely follows the birth of IBM and the founder [of its predecessor company], Herman Hollerith. His electromechanical tabulation system used punched cards to record data for the 1890 US census; the cards were then used to tabulate statistical data at a rate much greater than anything possible by human effort alone. As a quantitative measure, Hollerith’s system completed the census in two and a half years, versus seven years for the previous census, and saved the government an estimated $5 million. This demonstration proved to the world the efficacy of mechanized tabulation systems.

Electromechanical systems like Hollerith’s eventually led to the development of fully electronic computers, which revolutionized computing and began a process of exponential growth that brought us into the digital age. However, that does not detract from the importance of machines such as the Harvard Mk I, which produced, for the first time, the very sort of calculations Charles Babbage had envisioned his Analytical Engine making a century earlier. Funded and built by IBM at the direction of a Harvard University researcher named Howard Aiken, the Harvard Mk I presents the third and final lesson of importance, perhaps the most important of all: the role of public perception in technological advance. The Harvard Mk I represented, to the public and the scientific community, the dawn of an era of “robot brains” and mechanical minds. Not long after its inception, computers with the ability to process information at speeds thousands of times greater came out of the foundation laid by that machine [What exactly do you mean by this?]. It marked a convergence of technology that sparked a new industry, one that continues to grow to this day.

Taylorism is one of the most important topics we have covered so far, as it laid the groundwork for many modern-day approaches to managing work. Taylorism, a very mechanical and business-minded system, focused on breaking work into individual tasks and optimizing each one so that the whole worked more efficiently. This idea has evolved over time into modern-day management systems. [Though it started in] industries such as mass production factories, [it came to also define how white-collar clerical work was done].

Another important topic we have covered is the ENIAC. This technological marvel revolutionized the concept of a computer at the time, and it continues to show how advanced technology can become. The ENIAC showed that computers could serve mankind in many facets of human life, as it added military capabilities to the existing business possibilities of computers [because it vastly sped up what computers could do by using electronics. Now, computers were useful for time-critical operations–theoretically. The ENIAC was unreliable early on, and in fact was not even completed soon enough to be of much use in the war effort]. The ENIAC also foreshadowed a paradigm shift in the balance between humanity and machines. In the 17th century, people were computers, but with the creation of the ENIAC (a “thinking” machine), the possibility arose that, eventually, computers [could do the work people used to do].

Yet another important topic was the Analytical Engine, [first thought of by Charles Babbage in 1834]. The Analytical Engine is important in and of itself, as it [theoretically] allowed for the quick computation of nearly any mathematical problem. [As a result,] this machine was a predecessor of the modern computer. However, what makes the Analytical Engine truly significant is not what it did, but rather what it didn’t do. Charles Babbage had the idea for the Analytical Engine while he was creating the Difference Engine, and this shift of focus, along with financial issues, led to his failure to complete the Analytical Engine before his death. If, however, the machine could have been built during his lifetime, its impact on business, leisure, and the future itself could have been immense. The Analytical Engine [begs us to] ask the question: “How many technological revolutions could have occurred if we hadn’t passed up the chance?”

The need for machines that accurately tabulated values and, more importantly, printed them correctly started the drive toward mechanized computing in Great Britain. This drive was catalyzed by Charles Babbage’s push to convince the prime minister to fund his Difference and Analytical Engines, the foundations of automatic computers. Around the same time, telegraphs helped allow quick transactions and [aided in the advance of] industrialization in Great Britain. Because Great Britain was increasingly becoming a hub for computing, more and more development occurred there. This was crucial to further developments, including the work of the code breakers during WWII [who used electronic computers to decrypt intercepted German messages].

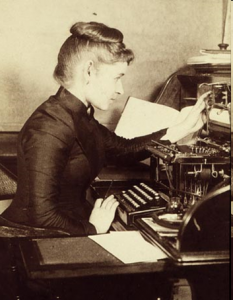

[In the late 19th and early 20th century,] Taylorism started to grow in the United States. This concept, that complex tasks could be broken down into simple steps [that used nearly interchangeable workers] who would not need much training, spread fairly quickly among the industrial powerhouses. Automated tools [facilitated this system and helped it become more] developed. The increasing feminization of the industrial workforce, which provided cheap, consistent labor, encouraged companies to further develop computing machines [to feminize the office labor force as well].

This increase in computing [had a major effect on “white collar” jobs, which were greatly increasing in number]. [Since] office jobs were on the rise, companies looked for ways to keep labor cheap. Inventions such as the Remington typewriter and Felt and Tarrant’s Comptometer led to an increase in office computing equipment. Eventually, advanced systems like IBM’s 405 Alphabetical Accounting Machine paved the way for companies to switch from manufacturing simple office machines to building computers that could perform much more complex tasks. This would play an important role in driving electronic computing forward.

In History of Computing, the first important issue we discussed was where the computer came from. The computer as we know it today is a device that runs our society. Before computers were what they are today, they were actual human beings doing computations. It was a job, a career, instead of a device. The importance of [human computers] in history helps us understand the social impact once actual computation devices started to be created.

Another large issue we discussed is why computation devices were being created in the first place. It seems like common sense now that forty human computers in a room is not the best way of doing computation: it costs money, and it takes time. This is exactly why computation machines started to be created. Babbage started working on the Difference Engine around 1822, with funding from the government. The Difference Engine was never built because Babbage changed course [and designed] another machine [called the Analytical Engine] that could compute even better than the Difference Engine [because it could theoretically do any calculation]. These machines were the beginning of what today we call computers. The idea of creating a computation machine stemmed from the desire to be more efficient [by doing work faster and more accurately].

Money is the next issue that is extremely important in the development of the computer. Money has been a central issue in every development [since the late 19th century]. Companies and the government funded scientists to create computation machines to become more efficient, which in turn created more money for companies [and better outcomes for government projects]. Companies like IBM and Remington Rand adapted to the needs of society, but mainly they were looking to make money. Remington, the forerunner of Remington Rand, adapted from making guns in the Civil War to making typewriters for thousands of companies. IBM in 1928 was a 20 million dollar company, but it eventually became the leading company in the development of the computer. Adaptability to society’s needs is what creates revenue for companies like IBM and Remington Rand.

So far we have seen how different applications of computing machines within industry and the military complex have influenced the design of early computers. We began with Babbage’s Difference Engine, which was one of the first mechanical machines designed to calculate [nautical tables for the British government]. Later, improving on this design, Babbage designed the Analytical Engine. This would be the first of three important computing concepts we have studied so far. Babbage illustrated one of the first steps of computing, which is the processing of information [by a general purpose machine that can theoretically do any calculation.]

Another example is the typewriter sold by Remington [in the last decades of the 19th c., which was the most successful version of the technology to this point]. This mechanical marvel represents two different concepts involved in future computing: data entry and the copying of data. This machine was used mainly in business and was one of the top office supplies purchased during the early 20th century.

Lastly, the punch card tabulating system used in the census [of 1890] was an early start in [large-scale] data processing and information storage in computing. This was not only one of the first ways to tabulate and process data using a machine but also a much faster, more efficient way to process data [that went far beyond the speed of] human computing [and greatly reduced the computing costs of a large-scale project].

Throughout the course of the class we have learned a lot about the precursors to electronic computing and the history of events that eventually led to the creation of electronic computers. One of the most important things I think we have learned is that computers existed long before the invention of electronics, and even before the invention of simple adding machines. Throughout history there has been a need for complex calculations, from nautical charts to census information. The term computer used to refer to the people who did these calculations. This shows that there was a need for the things modern computers do long before their invention, and that modern computers were not [a shocking 20th c. invention] but something that had been in demand [for a long time to] speed up many processes.

Another important thing we learned [about was the systematization of large-scale data processing using people, through] the methods of de Prony, the Bankers’ Clearing House, and Frederick Taylor’s techniques. These methods showed the efficiency that could be achieved by breaking a large task into [many smaller, easier] parts and giving these parts to many different people. This was similar to factory-style [industrial] labor, and it also led to mechanization, as it showed engineers and inventors that similar tasks could be done by machines, as long as the work was divided into smaller parts that were simple and repeatable.

One of the most important things we learned was how these different precursors to electronic computing affected society, especially in the realms of labor and capital. The mass production of the typewriter allowed more women to enter the workforce and increased the number of white-collar jobs relative to physical labor. Spending on office equipment increased steadily from the early 1900s to the 1940s, and as a result money was also spent on training people to use this equipment. [This meant that…]

The second point is another interesting thing I remember from class. But there was a downside to this, also mentioned in class: such a technique makes tasks boring and leaves no room for acquiring more knowledge, because a person does the same thing over and over again.

Of all the material on the precursors of modern computing covered in this course so far, I feel that the most important things we learned about are the early computing machines: the Difference Engine, the Analytical Engine, and the Mark 1 [These are separated by more than 100 years and significant differences in technology… might you want to distinguish between them a bit more, rather than writing about them as though Babbage’s machines and the Mark I are very similar?]. These machines formed the foundation of our modern computers. If these machines had not been invented, we probably would not be where we are with today’s computers.

The Difference Engine, of which only a small demonstration section was completed in the early 1830s, is important because it was the foundation for computational machines. Although it could only do basic calculations, it led to further advancements such as the Analytical Engine, which could theoretically do much more advanced calculations. Even though the Analytical Engine was never built, it inspired further development in the field.

These machines eventually led to the Mark 1, an electromechanical machine that could be programmed [using a combination of machine code and reconfiguring the hardware of the machine]. It was installed at Harvard in February 1944, and although it was significantly slower than electronic machines, it still holds an important place in history [because it helped create and educate programmers who went on to have major impacts in the field of computing and the development of programming techniques–most notably Grace Hopper]. If it weren’t for these machines, the modern computing era might never have started.

One of the most important things we have learned so far is how business machines changed life dramatically. They reduced the number of human computers needed. They also increased productivity, helped the economy, and created new jobs. These machines were used during the war for calculations, but they also became increasingly common in the business world. They improved communication and changed the way of life. […]

Another important thing we have learned about is Taylorism: the process of breaking work down into steps that require little skill. This increases productivity and reduces labor costs.

Feminization of the workforce [grew in conjunction with Taylorism]. Jobs were given to women in the field for a variety of reasons. First, the positions were viewed as deskilled, and because of this, the jobs did not pay very well. Also, it was easy to train and replace people in these positions.

So far we have covered a lot of material on the precursors of electronic computing. Of all the things we’ve covered, I think the most important to modern computing are Charles Babbage’s Difference Engine, office machines like the typewriter, and the punch card tabulating machine. With the Difference Engine, Babbage wanted to build a machine for making tables that could perform calculations and print [them out]. The Difference Engine also led to the idea of the Analytical Engine, which could do everything the Difference Engine could do, as well as more complex calculations, and which had the same logical organization as modern computers. [The importance of the Analytical Engine was that it was designed to be a general-purpose computing device: one that could do any calculation.]

Another important thing we learned is that the typewriter assisted in recording data and documenting information. The typewriter was a great step forward in developing [ways of automating] copying and document creation, as well as in recording [and saving written] data.

Another thing we learned about was punch card tabulating machines. [The first important one] was used for the 1890 census [and] used coded cards for age, gender, and other information. The clerks punched holes in each card to enter information. [Then, the millions of cards–one per person in the US–were sorted and tabulated using Hollerith’s machines.] This method sped up the process and was less expensive [than doing all the work by hand].

We’ve had a lot of discussion about the invention of today’s electronic computers. One of the things we have learned so far is the progression from simple mechanical machines to the computers of today. Computation used to be done manually, [and through the mid 20th century] it was done by humans who were actually called ‘computers’. Large-scale computing was first done using mathematical tables; this was followed by the invention of the first adding machines, such as the Arithmometer. There has been a lot of improvement over the years that has led us to where we are today in terms of electronic computing.

Another thing we learned about is the contributions (and the contributors) to these inventions, as well as the healthy competition that further propelled innovation. The contributors included individuals like Thomas de Colmar, who invented the Arithmometer in 1820, Charles Babbage, and many others, as well as companies like Remington Rand, National Cash Register, Burroughs, and IBM. [What about these contributors?]

We also learned about the impact of mechanization and computerization on wartime activities and the labor market. The Colossus helped British code breakers during World War II, and the ENIAC was built for the US Army’s wartime calculations, although it was not completed in time to contribute much to the war effort. Also, new inventions shifted human labor by creating jobs for women. [Many jobs] like typing, [machine operation, programming], etc. became [seen as suitable for] women [early on].

In the world of technology, there is always a gradual progression in which each idea and creation helps make the next one better. One of the first mechanical office machines was the typewriter. [The first widely successful typewriter was introduced in] 1874 by the Remington Company. In the years that followed, the typewriter continued to be refined, becoming faster and more efficient than anything before it. The typewriter showed people how much one machine could accomplish, and how quickly.

The founding of IBM (International Business Machines) was also very important in the history of technology. In the 1920s it became a very dominant company. IBM machines were found nearly everywhere, and because they were rented to businesses rather than sold, they provided a steady income for IBM. The company suffered somewhat in the Great Depression, but this changed in 1935. Under Roosevelt’s New Deal, the new Social Security system required that employment records be kept for the whole working population. Soon enough, IBM had more business than any other office machine company in the world.

Lastly, the Mark 1 took technology to another level. It was the first [important, general-purpose] electromechanical computer. […] The machine was a calculator that could add, subtract, multiply, and compute logarithms and trigonometric functions, but it couldn’t do much else. Although some people say that the Mark 1 was a technical dead end, others say it was an “icon for the computer age.” The significance of the Mark 1 lies not in its functionality, but in its role as a training ground for [programmers].

Speed has been an important issue that kept coming up with the precursors of electronic computers. Before electronic computers there were mechanical computers and human computers. At first, human computers would perfect their skills to calculate at speeds far beyond those of the average individual. These human computers were clerks, accountants, and others. This was followed by pairing humans with mechanical devices that made calculation much more precise and faster. The machines that made calculations much faster were the calculating engines, or punch card programmed mechanical machines.

Government intervention was necessary both for funding and for putting machines into practice. Although the potential of the electronic computer’s precursors was not well recognized, they still proved very useful to the government. It began when the government needed a faster way of completing the United States census. It then continued when the government needed to make calculations for projectiles, communication, and navigation. Overall, the government provided both the funding to complete some of the calculating machines and the practical applications that allowed them to show their potential.

[…]

One of the most important things we’ve learned about so far is the failure of Charles Babbage. While initially successful with the Difference Engine, he became over-ambitious by trying to sell the British government on the Analytical Engine before finishing the Difference Engine. Unfortunately, Babbage was ahead of his time, and the British government denied him [further] funding. Had he actually finished the Difference Engine, history [may] have taken a drastically different course. With a finished and working Difference Engine, Babbage would probably have gotten funding for the Analytical Engine from the British government or other sources.

Another important thing we have learned about is the telegraph. The telegraph is important because it revolutionized how people communicated, much as the internet revolutionized communication in the late 20th century. The initial infrastructure was set up by rail companies to ensure that trains running in opposite directions would not enter single-track sections at the same time. When people learned of the communication speeds telegraphs were capable of, demand grew rapidly. The telegraph system was also the first large-scale electrical network to be managed.

The third most important thing we’ve learned about was the 1890 U.S. census. This census was significant because it used a machine, rather than relying solely on human calculators, to process the data. The machine was invented by Herman Hollerith, and it processed the data from [millions of] punch cards [which were laboriously prepared, one by one, by people using special punching devices]. [With the help of this] machine, the census took only two and a half years to complete, versus the seven years required for the previous census. It was also determined that the Hollerith system saved the U.S. government over $5 million [on the 1890] census.

There are many important points and events in the history of […] computing. However, I will discuss the three I believe were the most important. The first is Babbage’s Difference Engine of 1822. Babbage conceived this machine to calculate nautical [tables] for sailors using machinery instead of human brain power. This was the first attempt at [automating very complex] problem solving. As a first attempt, it was bound to encounter problems and complications, but it was a huge [theoretical] step forward in mechanized problem solving.

Second was the introduction of machinery into the workplace in the early 1900s. The Burroughs adding machine and the Remington typewriter are just two of the many machines introduced into offices. By placing these machines in the hands of the businessman […], employers could hire less skilled workers […] for much less money, while the machines enabled them to work and generate profit much faster than an unaided worker could. Much of today’s economy still operates this way as a result.

Last, but not least, was the entry of women into the workforce. [In the late 19th century,] more than 90% of the [clerical] workforce was [men], and they were [seen as more] skilled and [were more] costly to hire and retain. However, [by the 1920s women entered the clerical workforce in large numbers, and by the 1930s they were the majority of office workers]. [The idea that machines made it possible to hire women and give them] minimal training [to operate] a machine [helped businesses make more] money. These are the three points I believe are the most important things I have learned so far.

I believe that one of the most important aspects of pre-electronic computing we have learned about was Charles Babbage’s designs for the Difference [Engine] and the Analytical [Engine] during the 1820s and 1830s. Babbage’s first design, the Difference Engine, was intended to calculate and produce mathematical tables mechanically, rather than relying on error-prone human computers such as those employed in Baron Gaspard de Prony’s French table-making project. The British government funded the project, and it took many years and vast amounts of money just to create a prototype. During the construction of the Difference Engine, in 1834, Babbage designed a far more complex, multi-purpose machine called the Analytical Engine. It was the first design of its kind, programmable by means of punched cards. He wanted to abandon the Difference Engine in favor of building the Analytical Engine, but the government instead cut funding for Babbage’s project. Progress toward the programmable computer stalled because the government wanted tables rather than a machine it saw as [expensive and] useless. Although Babbage’s design for the Analytical Engine was innovative, it was [too] ambitious for its time [because of the complexity of the idea and the complexity of the parts required to build it].

Analog machines [were] another important development of the pre-electronic age. Analog machines were single-purpose physical models of the systems they were designed to study, such as the orrery, a model used to illustrate and explain planetary motion. Other analog machines, like Lord Kelvin’s tide-predicting machine and Vannevar Bush’s profile tracer and product integraph, were highly useful in solving only specific [problems]. Bush saw this limitation and later invented the differential analyzer, but even that machine was useful only for a particular class of engineering problems (those expressed as differential equations), not for problems in general. Although helpful for calculation, analog machines eventually became obsolete because they were not multi-purpose.

The final significant precursor to electronic computing was Taylorism and the development of office machines. Taylorism, or Scientific Management, was a management approach that aimed to improve efficiency in the workplace [by use of time-and-motion studies and scientific analysis of how long it took workers to complete each task]. Its main goals were to lower costs, speed up production, and reduce the need for training. As machines such as typewriters, adding machines, cash registers, and punched-card machines became more common in the business world, firms could hire cheaper, less trained labor [who were often young women,] and at the same time speed up production. This added to the technology-business cycle [explain what you mean here], as more companies like IBM […] were motivated to make further technological advances.

One of the important precursors to electronic computing is the typewriter. The typewriter came into heavy use in many businesses during the 1880s, and it also created many jobs for women. The typewriter is an important precursor to electronic computing because its success was followed by the creation of many other types of office machines, and the development of these machines ultimately led to electronic computing.

Another machine that influenced later developments was Babbage’s Analytical [Engine], designed decades before the typewriter. Although it was never completed, others looked to it for ideas. This can be seen in the work of Howard Aiken. Aiken’s interest in the Analytical Engine led to the invention of the Automatic Sequence Controlled Calculator [which was installed at his lab at Harvard in] February 1944 [after it was built at IBM in Endicott, NY]. This was an important precursor to electronic computing because the ASCC was the first [important general-purpose] computer.

An idea that was instrumental in the development of electronic computing was Taylorism. Taylorism is a method of scientific management that emerged in America in the 1880s. Taylorism encouraged systematization, which changed the organization of offices. Machines such as the typewriter and the adding machine, along with methods of organization such as filing systems, were widely adopted in American offices. Taylorism helped American businesses become machine-based. The extensive use of machines in businesses [encouraged] the development of new machines, and eventually [created a fertile market for the] electronic computing [solutions originally designed by governments and universities].

The most important precursors to electronic computing were the human “computers”. […]

Babbage’s designs for machines such as the Difference Engine and the Analytical Engine were the next step toward [general purpose electromechanical computers]. Though never built as Babbage intended because of insufficient funds [and difficulty machining suitable parts at the time], the idea interested many people, such as Ada Byron (later Countess of Lovelace) [who described the ways Babbage’s machine might be used].

A final important precursor to electronic computing was the [version of the] typewriter [introduced in the late 19th c.], which led to the layout of modern-day [QWERTY] keyboards. As typing became [a job, it became feminized], and women’s labor [was used because it was inexpensive]. Other companies had already developed typewriters, but Remington, a firearms manufacturer, decided to expand on the idea and improved the function and capability of typewriters so that they [far] surpassed the speed of [handwriting].